🔐 Building a Tailscale Subnet Router in Azure Container Instances

If you've ever needed a secure gateway into your Azure Resources via Tailnet... look no further!

If you've ever wanted to securely access private Azure resources without deploying VPN gateways or NAT appliances, or punching holes in NSGs... then this one is for you!

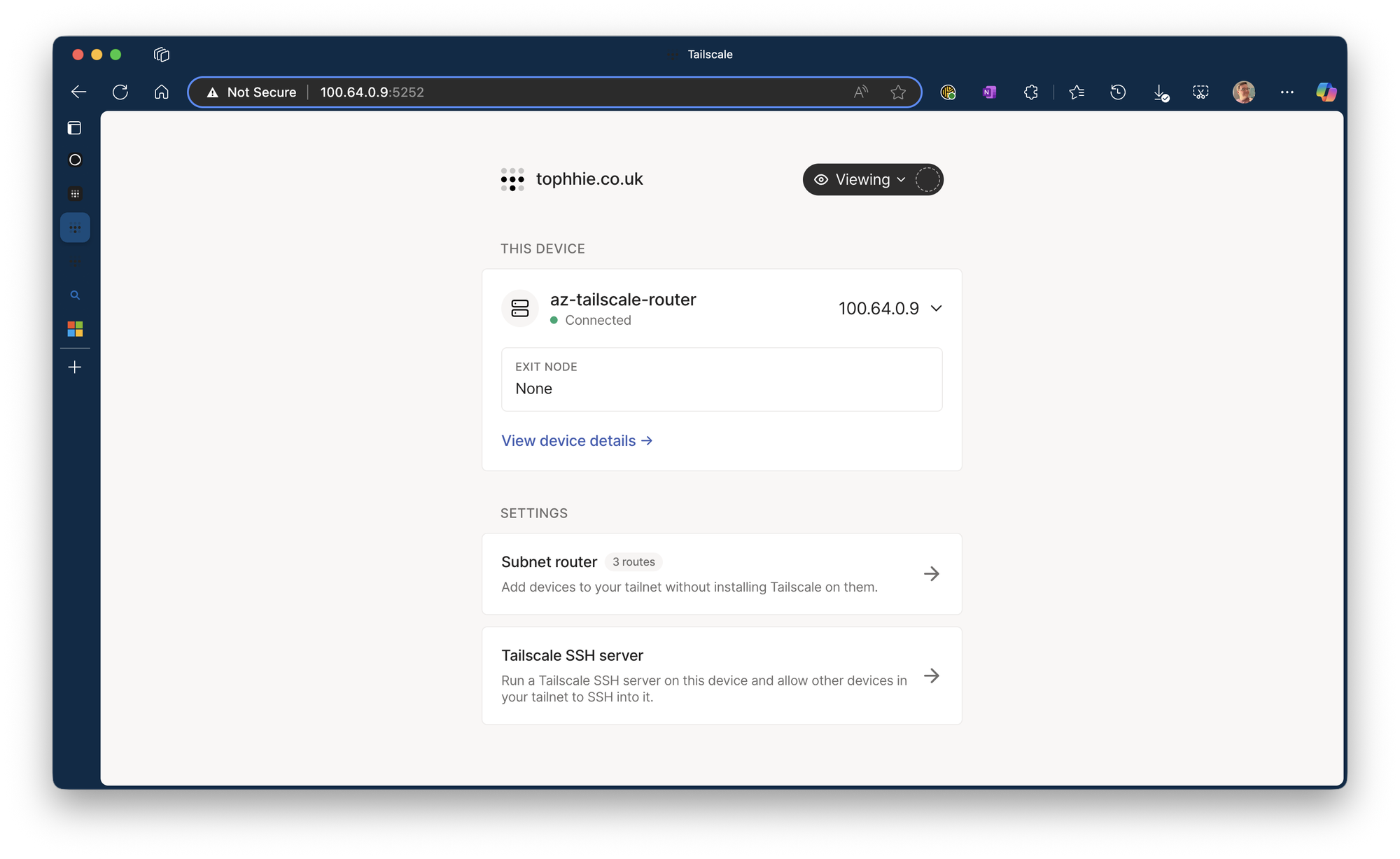

In this post, I walk through how I deployed a fully functional Tailscale subnet router - with the web admin UI - inside Azure Container Instances (ACI). And yes, it works! Even the admin interface on port 5252.

🌐 What We're Building

We're deploying a Tailscale subnet router that:

- Authenticates with a Tailscale auth key

- Advertises one or more Azure subnets

- Runs in ACI with persistent state via Azure File Shares

- Pulls the container image from Azure Container Registry (ACR) using a user-assigned managed identity

- Exposes Tailscale's admin interface on port 5252

🧱 What Azure Resources Will We Need?

- Azure Container Registry (ACR)

- Azure Container Instance (ACI)

- Azure File Share (for Tailscale state)

- Azure Virtual Network + Subnet

- User-assigned Managed Identity

🐳 Docker Image

The Docker image, built and stored in ACR, is based on Alpine and installs Tailscale manually:

    FROM alpine:latest

    RUN apk add --no-cache bash curl iptables

    # Install Tailscale
    RUN curl -fsSL https://pkgs.tailscale.com/stable/tailscale_1.84.0_amd64.tgz | tar -xz -C /usr/local/bin --strip-components=1

    # Add startup script
    COPY start.sh /start.sh
    RUN chmod +x /start.sh

    ENTRYPOINT ["/start.sh"]

start.sh

    #!/bin/sh
    set -e

    echo "[INFO] Starting tailscaled..."
    tailscaled --tun=userspace-networking &
    sleep 3

    # Set defaults if not provided
    : "${TS_HOSTNAME:=az-tailscale-router}"
    : "${TS_ROUTES:=10.1.0.0/24,10.0.0.0/24,10.99.0.0/24}"

    echo "[INFO] Using hostname: $TS_HOSTNAME"
    echo "[INFO] Advertising routes: $TS_ROUTES"

    if [ -z "$TS_AUTHKEY" ]; then
        echo "[ERROR] TS_AUTHKEY is not set. Exiting."
        exit 1
    fi

    echo "[INFO] Bringing up Tailscale node..."
    tailscale up \
        --authkey="$TS_AUTHKEY" \
        --advertise-routes="$TS_ROUTES" \
        --hostname="$TS_HOSTNAME"

    echo "[INFO] Enabling Tailscale web interface..."
    tailscale set --webclient

    echo "[INFO] Tailscale is running. Keeping container alive..."
    tail -f /dev/null

Let's run through the start.sh:

- First, we start tailscaled in userspace-networking mode and give it a few seconds to come up.

- We then configure some default environment variables.

  - One configures the hostname of the subnet router.

  - The other lists the Azure subnets we want to be routable.

- We then check whether the TS_AUTHKEY environment variable (we'll come to this) has been set. If not, we bail.

- We then start up Tailscale!

- Then, we enable the admin interface.

- Finally, we keep the container alive.
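One thing worth noting about the walkthrough above: the fixed `sleep 3` assumes tailscaled is ready within three seconds. As a minimal sketch of an alternative (not what my script does), you could poll until a readiness check passes. The `wait_for` helper and the `WAIT_INTERVAL` variable are hypothetical names I've made up for illustration; they are not part of Tailscale.

```shell
#!/bin/sh
# Hypothetical helper: retry a command until it succeeds or attempts run out.
# Usage: wait_for <max_attempts> <command> [args...]
wait_for() {
    max_attempts="$1"
    shift
    attempt=1
    while [ "$attempt" -le "$max_attempts" ]; do
        # Run the check quietly and return success as soon as it passes.
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        attempt=$((attempt + 1))
        # Pause between attempts (WAIT_INTERVAL is an assumed, optional override).
        sleep "${WAIT_INTERVAL:-1}"
    done
    return 1
}
```

In start.sh, this could stand in for `sleep 3` with something like `wait_for 30 test -S /var/run/tailscale/tailscaled.sock || exit 1`. The socket path there is an assumption based on tailscaled's usual default on Linux, so check it against your own image first.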

🚀 Deploy Script (PowerShell)

Here's the deployment script I use in Azure Cloud Shell:

    function Deploy-TailscaleRouter {
        ## Azure Resources
        $tsAuthKey = Read-Host -Prompt "Provide Tailscale Auth Key"
        $resourceGroup = "Internal_Services"
        $routerName = "az-tailscale-router"

        ## Determine image to be deployed
        $imageTag = Read-Host -Prompt "Enter image tag (default: latest)"
        if (-not $imageTag) { $imageTag = "latest" }
        $image = "myacrinstance.azurecr.io/az-tailscale-router:$imageTag"

        ## Fetches the Containers subnet
        $vnetName = "Internal_Services-vnet"
        $subnetName = "Containers"
        $subnet = az network vnet subnet show --resource-group $resourceGroup --vnet-name $vnetName --name $subnetName | ConvertFrom-Json
        $subnetId = $subnet.id

        ## Identity
        $identity = $(az identity show --name nameOfUserManagedIdentity --resource-group Internal_Services --query id -o tsv)
        $storageAccountKey = $(az storage account keys list --account-name storageaccountname --resource-group Internal_Services --query [0].value -o tsv)

        ## Remove previous container
        az container delete --name $routerName --resource-group $resourceGroup --yes

        ## Deploy new container
        az container create `
            --resource-group $resourceGroup `
            --name $routerName `
            --image $image `
            --cpu 1 `
            --memory 1.5 `
            --os-type "Linux" `
            --location "uksouth" `
            --restart-policy "Always" `
            --subnet $subnetId `
            --assign-identity $identity `
            --acr-identity $identity `
            --ports 5252 `
            --environment-variables `
                TS_AUTHKEY="$($tsAuthKey)" `
            --azure-file-volume-share-name "filesharename" `
            --azure-file-volume-account-name "storageaccountname" `
            --azure-file-volume-account-key $storageAccountKey `
            --azure-file-volume-mount-path "/var/lib/tailscale"
    }

    Deploy-TailscaleRouter

- The script starts by asking for the Tailscale auth key, which is generated from the Tailscale admin portal.

- It then configures some necessary variables, including:

  - The resource group to deploy to.

  - The container instance name.

- It then asks for the image tag of the Docker image, defaulting to "latest".

- It then configures some more variables, including:

  - The vNet name.

  - The subnet name.

  - The actual Azure subnet.

  - The Azure subnet ID.

- It then fetches the user-assigned managed identity, so the ACR can be accessed, and the storage account key, so the file share can be mounted.

- We then remove the old container instance (if it exists).

- Then, deploy the new container instance.
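Once the container has been recreated, it's worth confirming that it actually came up. Here's a rough sketch of how that check could look with the Azure CLI (written as plain shell rather than PowerShell, but the `az` calls are the same). The `container_state` and `router_is_running` helpers are hypothetical names, and I'm assuming the container group reports its state under `instanceView.state`.

```shell
#!/bin/sh
# Resource names copied from the deployment script above.
RESOURCE_GROUP="Internal_Services"
ROUTER_NAME="az-tailscale-router"

# Hypothetical helper: print the container group's current state.
# Assumes the state is exposed as instanceView.state in the az output.
container_state() {
    az container show \
        --resource-group "$RESOURCE_GROUP" \
        --name "$ROUTER_NAME" \
        --query "instanceView.state" \
        --output tsv
}

# Hypothetical helper: succeed only when the group reports "Running".
router_is_running() {
    [ "$(container_state)" = "Running" ]
}
```

A typical use would be `router_is_running || az container logs --resource-group "$RESOURCE_GROUP" --name "$ROUTER_NAME"`, which dumps the start.sh output if something went wrong.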

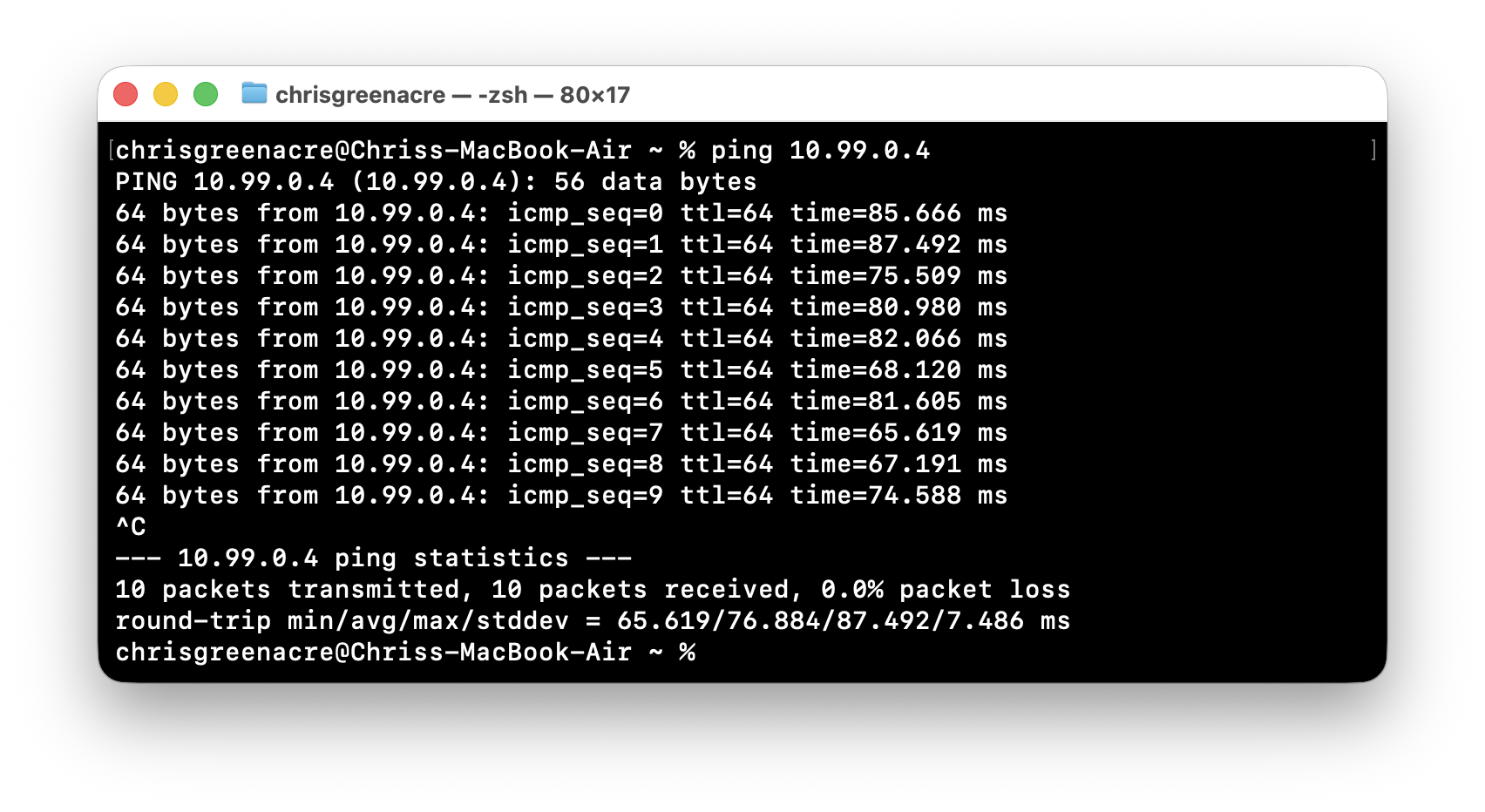

🌈 The Result

After deployment:

- The node appears in the Tailscale admin portal.

- Routes are advertised and can be approved.

- Browsing to 100.x.x.x:5252 from any device on the tailnet shows the web UI.

- Access to Azure resources via private IPs just works!
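If a private IP doesn't respond, the first thing I'd check is whether it actually falls inside one of the advertised routes. As an illustrative sketch only (the helper names `ip_to_int`, `ip_in_cidr`, and `ip_in_routes` are made up, and this handles plain IPv4 without leading zeros), the check can be done in POSIX shell arithmetic against the same comma-separated format used by TS_ROUTES:

```shell
#!/bin/sh
# Hypothetical helper: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs="$IFS"
    IFS=.
    set -- $1          # split the address into its four octets
    IFS="$old_ifs"
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeed if IPv4 address $1 falls inside CIDR $2.
ip_in_cidr() {
    ip_int=$(ip_to_int "$1")
    net_int=$(ip_to_int "${2%/*}")
    prefix="${2#*/}"
    # Build the netmask from the prefix length (for example /24 -> 0xFFFFFF00).
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( ip_int & mask )) -eq $(( net_int & mask )) ]
}

# Hypothetical helper: succeed if IPv4 address $1 is covered by any route
# in the comma-separated list $2 (same format as TS_ROUTES).
ip_in_routes() {
    for route in $(echo "$2" | tr ',' ' '); do
        if ip_in_cidr "$1" "$route"; then
            return 0
        fi
    done
    return 1
}
```

For example, `ip_in_routes 10.99.0.7 "$TS_ROUTES"` succeeds with the default routes from start.sh, while an address in a subnet you haven't advertised would fail the check.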

🚫 What This Setup Doesn't Do

- No exit node support: ACI doesn't allow NAT / IP forwarding, so it cannot be used as an exit node.

- No persistent logs unless you attach additional storage/logging.

  - The script above shows how storage is attached, via the "azure-file-volume" parameters (there it is mounted at /var/lib/tailscale), but this isn't a hard requirement.

- No inbound port access from the public internet (by design).

✅ What This Setup is Perfect For

- Secure, cost-effective access to Azure vNets from anywhere.

- Internal dev environments, private APIs, Azure SQL, or jumpboxes.

- Fully automated deployments and restarts via Azure CLI or DevOps.

🏁 Wrap Up

Now that I've deployed a Tailscale subnet router via Azure Container Instances, I can reach my private Azure resources whenever I need to, just by connecting to my tailnet on any of my devices.

If I ever need to expose a new subnet, I just edit my start.sh and commit the change. That triggers a Docker build and upload to ACR, and I can then manually recreate my container when needed!

In future, I'll be automating this, so that changes I make to the start.sh are automatically built, pushed, and redeployed in one fell swoop. But for now, this is enough to get me started!
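I haven't built that automation yet, so treat the following as a rough sketch of what the build-and-push half might look like rather than a finished pipeline. It assumes the Dockerfile and start.sh sit in the current directory of a git repository, and the `image_tag` and `build_and_push` helpers are hypothetical names of my own.

```shell
#!/bin/sh
# Registry and image names taken from the deployment script in this post.
ACR_NAME="myacrinstance"
IMAGE_NAME="az-tailscale-router"

# Hypothetical helper: derive an image tag from the current git commit,
# falling back to "latest" when git is unavailable or this is not a repo.
image_tag() {
    git rev-parse --short HEAD 2>/dev/null || echo "latest"
}

# Hypothetical helper: build the image in ACR from the current directory.
build_and_push() {
    tag="$(image_tag)"
    az acr build \
        --registry "$ACR_NAME" \
        --image "$IMAGE_NAME:$tag" \
        .
}
```

Running `build_and_push` would leave a freshly tagged image in ACR; the redeploy step would then be the same delete-and-create sequence from the deployment script, given that tag.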

And you can do this too! This post shows that it can be done and covers some basic steps to get started. If you have any questions, are stuck, or just need assistance, give me a shout!

Until next time 👋🏻